I've shown this plot a couple of times already, but here it is again, with another that shows the bar we have to limbo under to win $3 million.

Anyone think this is possible?

Any predictions of what the final winning error will be?

I predict 0.453

Place your bets here...

Monday, 26 December 2011

Saturday, 17 December 2011

What's Going On Here

In many of the analytics problems I have been involved in, the problem you end up dealing with is not the one you initially were briefed to solve.

These new problems are always discovered by visualising the data in some way and spotting curious patterns.

Here are three examples...

1. Algorithmic Trading Challenge

The Algorithmic Trading Challenge is based on data from the London Stock Exchange and is about things called 'Liquidity Shocks'. I know nothing about these but we had data, so the first thing I did was plot a few graphs to see if I could get a better understanding of things.

The plot below shows the times these 'Liquidity Shocks' occur.

Now it is quite clear there is something going on at 1pm, 2:30pm, after 3:30pm and at 4pm.

Interestingly, these spikes are only evident when all commodities are looked at together; they are not as obvious in any individual commodity.
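A plot like this is just a count of events per time-of-day bin. Here is a minimal sketch in R, using simulated data rather than the competition data: the column names `security_id` and `secs`, the 5-minute bin width and the artificial spike at 2:30pm are all assumptions made for illustration.

```r
# Simulated stand-in for the shock data: one row per liquidity shock.
# Column names and all values here are made up for illustration.
set.seed(1)
shocks <- data.frame(
  security_id = sample(1:100, 5000, replace = TRUE),
  secs = c(runif(4500, 8 * 3600, 16.5 * 3600),          # background activity
           rnorm(500, mean = 14.5 * 3600, sd = 120))    # artificial spike near 2:30pm
)

# Count shocks in 5-minute bins, pooling all securities
bin_width <- 300
shocks$bin <- floor(shocks$secs / bin_width) * bin_width
counts_all <- table(shocks$bin)

# The busiest bin, expressed as a clock time
peak_secs <- as.numeric(names(counts_all)[which.max(counts_all)])
peak_time <- sprintf("%02d:%02d", peak_secs %/% 3600, (peak_secs %% 3600) %/% 60)

plot(as.numeric(names(counts_all)) / 3600, as.vector(counts_all), type = "h",
     xlab = "Hour of day", ylab = "Shocks per 5 minutes",
     main = "All securities pooled")

# The same count for one security has far fewer events per bin,
# so any spike is much harder to see
counts_one <- table(shocks$bin[shocks$security_id == 1])
```

With only a handful of events per security, the per-security counts are mostly noise, which is one plausible reason the spikes only show up in the pooled view.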

My first question if I was solving a business problem would be to return to the business to get more insight in what was going on here. My initial thoughts were lunch breaks and the opening times of other Stock Exchanges around the world - as 3:30pm London time could be around opening time in New York.

Understanding the cause of these peaks is important as you would expect the reaction to them (the problem to solve) to be a function of the cause.

If we did discover it was the opening times of other exchanges, then I would ask for extra information like the specific dates, so I could calculate when these peaks would occur in the future when the clocks changed. We do not have this information at the current time, or even the day of the week (it can be inferred, but not accurately, as there will be public holidays when the exchanges are closed).
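To see why the dates matter, here is a small sketch that converts a 09:30 New York open into London clock time on three dates. The dates are picked purely for illustration (the competition data has none), and that the peaks are caused by the New York open is still only a hypothesis.

```r
# Illustrative dates only: mid-March, mid-summer and mid-winter 2011
dates <- c("2011-03-20", "2011-07-01", "2011-12-15")

# 09:30 local time in New York on each date
ny_open <- as.POSIXct(paste(dates, "09:30"), tz = "America/New_York")

# The same instants expressed as London clock time
london_time <- format(ny_open, format = "%H:%M", tz = "Europe/London")
data.frame(date = dates, ny_open_in_london = london_time)
```

The US and the UK change their clocks on different weekends, so for a few weeks each year the gap between the two cities is an hour different from normal. A model that has learnt a fixed London clock time would be looking in the wrong place during those weeks.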

As it stands, any models built could potentially fail on the leaderboard (or real-life) data, as our model might think 2:30pm is a special time, whereas really it is when another exchange opens, or when people come back from lunch. We need this causal information rather than just dealing with the effect - time differences change and lunch breaks may change.

The current competition data is potentially lacking the full information required to build a model that is as robust as possible over time.

2. Interesting Distributions

One of the first things I do when receiving a data set is to scan the distributions of all variables to sanity check them for anything that looks out of place - but still things can sneak past you.

The following is exam mark data in the range 0-100. If we bin it in 20 bins then things look reasonable, but if we zoom in then we get the 'what is going on here' question again. It is quite clear what is going on, but if exam marks are the thing we are trying to predict, how do we deal with this phenomenon and how would our algorithm cope looking at it blindly? And what if the pass mark or the rules changed - the algorithm would fail. Again, we need to be aware of the underlying root cause and not just the effect.
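The effect of bin width is easy to reproduce. The sketch below uses simulated marks, and it assumes the pattern is marks just below a pass mark being nudged up to it; the pass mark of 50 and the 3-mark nudge are invented for the example and are not taken from the real data.

```r
# Simulated marks, purely for illustration
set.seed(42)
marks <- pmin(100, pmax(0, round(rnorm(5000, mean = 55, sd = 15))))

# Assumed mechanism: marks within 3 of a hypothetical pass mark get nudged up to it
pass_mark <- 50
nudged <- marks >= pass_mark - 3 & marks < pass_mark
marks[nudged] <- pass_mark

# Coarse bins hide the effect, one bin per mark reveals it
par(mfrow = c(1, 2))
hist(marks, breaks = seq(0, 100, by = 5), main = "20 bins", xlab = "Mark")
hist(marks, breaks = seq(-0.5, 100.5, by = 1), main = "One bin per mark", xlab = "Mark")

# Counts per individual mark around the pass mark
counts <- table(factor(marks, levels = 0:100))
counts[as.character(45:55)]
```

In the 20-bin view the marks from 45 to 50 share a single bin, so moving marks around inside that range changes nothing. The one-bin-per-mark view shows the gap just below the pass mark and the pile-up on it.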

3. Don't Get Kicked

This is another Kaggle Competition...

Kicked cars often result when there are tampered odometers, mechanical issues the dealer is not able to address, issues with getting the vehicle title from the seller, or some other unforeseen problem. Kick cars can be very costly to dealers after transportation cost, throw-away repair work, and market losses in reselling the vehicle.

Modelers who can figure out which cars have a higher risk of being kick can provide real value to dealerships trying to provide the best inventory selection possible to their customers.

The challenge of this competition is to predict if the car purchased at the Auction is a Kick (bad buy)

This is a binary classification task and a quick way to spot data issues with this type of problem is to throw it in a decision tree in order to spot what are called 'gimmees'. These are cases that are easily and perfectly predictable, and more often than not they are a result of including predictor data that just shouldn't be there as it is not known at the time (future information) - an extraction issue that would result in a useless model. (It is common that people think they have built really good predictive models using future information without really questioning why their models are so good!)

Another reason 'gimmees' occur is poorly defined target variables, that is, not excluding certain cases (an example in target marketing would be not excluding dead people from your mailing list and then predicting they won't respond to your offer!).

After a bit of data prep I threw the Don't Get Kicked Data into a Tiberius Decision Tree - the visual below immediately tells me there are clear cut cases of cars that will be kicked - it is almost black and white.

These 'gimmees' can be described by the rules...

[WheelTypeID] = 'NULL' AND [Auction] <> 'MANHEIM'

MANHEIM is an auctioneering company where cars are auctioned - there are 2 main auctioneers in the data set plus 'other'.
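Once the tree has suggested a rule, it is worth checking how pure it really is. Here is a minimal sketch in R on a few made-up rows: the target column name `IsBadBuy` and the auction value 'ADESA' are assumptions for illustration, and the numbers mean nothing.

```r
# Made-up rows standing in for the training data.
# IsBadBuy (0/1 target) and the 'ADESA' value are assumed names.
cars <- data.frame(
  WheelTypeID = c("NULL", "1", "2", "NULL", "NULL", "1", "2", "NULL"),
  Auction     = c("ADESA", "MANHEIM", "ADESA", "OTHER",
                  "MANHEIM", "OTHER", "MANHEIM", "ADESA"),
  IsBadBuy    = c(1, 0, 0, 1, 0, 1, 0, 1),
  stringsAsFactors = FALSE
)

# Flag the rows matched by the rule
cars$gimmee <- cars$WheelTypeID == "NULL" & cars$Auction != "MANHEIM"

# Kick rate and row count inside and outside the rule
kick_rate <- tapply(cars$IsBadBuy, cars$gimmee, mean)
n_rows    <- table(cars$gimmee)
rbind(kick_rate, n_rows)
```

A rule with a kick rate very close to 0 or 1 on a reasonable number of rows is the kind of 'gimmee' that deserves a question back to the business before it goes anywhere near a model.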

Having worked extensively with car auction data before I know that there are certain auctions where only 'write off' cars are sold, that is those that are sold for scrap because they have been in accidents. I also know that different auction houses will record data differently.

The above simple rule easily identifies cars that are more than likely going to be 'kicked' - but this is probably because they are 'kicked' in the first place (are we saying that someone in a coma is more likely to die?). Is this useful? Is this a poor definition of what is 'kicked'? Why does a missing value for WheelTypeID make such a big difference between auction houses?

A bit more digging reveals location and the specific buyer drills down on these gimmees even more...

[WheelTypeID] = 'NULL' AND [Auction] <> 'MANHEIM' AND [VNST] in ('NC','AZ') AND [BYRNO] NOT IN (99750,99761)

and after excluding these 'gimmees' it becomes clear there are certain buyers that just don't buy kicked cars, especially 99750 and 99761...

byrno = 99750 and VNST in ('SC','NC','UT','ID','PA','WV','MO','WA')

byrno = 99761 and Auction = 'MANHEIM'

byrno = 99761 and MAKE = 'SUZUKI'

byrno = 99761 and SIZE = 'VAN'

byrno = 99761 and VNST IN ('FL','VA')

Now is this actually useful?

The challenge of this competition is to predict if the car purchased at the Auction is a Kick (bad buy)

The model is going to focus on who bought the car rather than the characteristics of the car itself. What happens if buyers suddenly change their policy? Wouldn't we rather just go and speak to these buyers to understand what their policy is and hence get some business understanding? Why is specific auction house location so important? Is it because of the specific auction house itself or because specific cars are actually routed to specific places (this does happen)?

Basically if this was a real client engagement I would be going back to them with a lot of questions to help me understand the data better so it can be used in a way that is going to be useful to them.

In Summary

When doing predictive modelling, you can throw the latest hot algorithm at a problem such as a GBM, Neural Net or Random Forest and get impressive results, but unless you thoroughly understand and account for the real dynamics of what is going on then the models could disastrously fail when these dynamics change. I find visualisation the key to spotting and interpreting these dynamics - which is why I would rather have a good data miner who knows what he is doing using free software over a poor data miner with the most expensive software - see http://analystfirst.com/analyst-first-101/

Friday, 16 December 2011

Two Become One

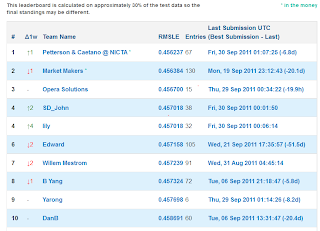

In the previous post I looked at the HHP leaderboard and discovered some interesting patterns regarding certain teams.

It looks as though the evidence has proved to be true, with SD_John and Lily now all of a sudden merging into a single team.

Interestingly they have also been in other competitions with very similar results.

This was the final standing in the Give Me Some Credit competition:

What is actually more interesting here is the demonstration of overfitting to the leaderboard. Opera Solutions & JYL are more than likely working together and we know Lily & SD_John are working together. If you look at the leaderboard just before the competition ended (on the 30%) you will see Opera near the top but the final position on the 70% was much worse. Similarly a few others found that relying on the leaderboard as an indication of the final position can be misplaced trust.

If you followed the competition forum, you will see team VSU also had multiple accounts for the same person, and they seem to have also fallen into the same trap of overfitting to the leaderboard - they ended up 9th on the 70% when they were first on the 30%.

The data mining lesson here is that you need to take all necessary steps to avoid overfitting, rather than just relying on the leaderboard feedback.
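A quick simulation shows how misleading the 30% leaderboard can be if you pick your best submission from it. This is only a sketch: the test set size and number of submissions are made up, accuracy is used as the score for simplicity, and every 'model' is pure noise so the true skill of each one is 50%.

```r
set.seed(123)
n_test   <- 10000   # size of the hidden test set (made up)
n_subs   <- 200     # number of submissions tried (made up)
n_public <- 3000    # the 30% that drives the public leaderboard

truth   <- rbinom(n_test, 1, 0.5)
public  <- sample(n_test, n_public)
private <- setdiff(seq_len(n_test), public)   # the 70% that decides the final ranking

# Each column is one submission of random 0/1 guesses
preds <- matrix(rbinom(n_test * n_subs, 1, 0.5), nrow = n_test)

public_acc  <- colMeans(preds[public, ] == truth[public])
private_acc <- colMeans(preds[private, ] == truth[private])

# Choose the submission that looks best on the public portion
best <- which.max(public_acc)
c(public = public_acc[best], private = private_acc[best])
```

The submission picked this way looks better than chance on the 30%, but it has no real skill, so there is no reason for that advantage to carry over to the 70%. The more submissions you choose between, the bigger the gap tends to be.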

Congratulations to Nathaniel, Eu Jin (small world - I used to work with Nathaniel at the National Australia Bank and regularly see Eu Jin at the Melbourne R user group) and Alec, who clearly did not overfit. A Perfect Storm!

Wednesday, 14 December 2011

Phantom of the Opera

There have been some recent announcements on Kaggle reminding competitors about the rules regarding teams and that a single person can't have multiple accounts in order to get around the daily submission limit.

I used the HHP leaderboard as an interesting data source to educate myself on the data manipulation capabilities in R and it became very evident that there was some curious behaviour going on.

From a data scientist viewpoint, this demonstrates the power of the human eye in picking up things that will give you the insight that an algorithm won't. In most (probably all) of my professional projects the important data issues and findings have been a result of looking at visualisations of the data and asking the question "what's going on here!".

The first curiosity on the leaderboard came from trying to discover whether the competition was attracting new entrants, by looking at the dates of entrants' first submissions. The two plots below show different ways of looking at the same data. What is obvious is that 29th Nov had an unusual number of new entrants.
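The new-entrant count described above can be sketched along these lines. This is not the code I actually ran: the submission log below is made up, and the column names `team` and `date` are assumptions.

```r
# Made-up submission log: one row per submission
subs <- data.frame(
  team = c("A", "A", "B", "C", "C", "D", "E", "F"),
  date = as.Date(c("2011-11-27", "2011-11-29", "2011-11-28", "2011-11-29",
                   "2011-11-30", "2011-11-29", "2011-11-29", "2011-11-30"))
)

# Keep each team's earliest submission
subs <- subs[order(subs$team, subs$date), ]
first_sub <- subs[!duplicated(subs$team), ]

# Number of new entrants per day
new_entrants <- table(first_sub$date)

# Two views of the same data: daily counts and the running total
par(mfrow = c(1, 2))
barplot(new_entrants, las = 2, ylab = "New entrants")
plot(as.Date(names(new_entrants)), cumsum(as.vector(new_entrants)), type = "s",
     xlab = "Date", ylab = "Cumulative entrants")

# Teams whose first submission fell on the busiest day
peak_date <- as.Date(names(which.max(new_entrants)))
first_sub$team[first_sub$date == peak_date]
```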

and looked at in another way...

What's going on here?

If you look at the team name of the entrants it is clear that all these accounts are somewhat connected - so no real mystery as to the cause of the blip for this date.

"accnt002" "accnt003" "accnt004" "accnt005" "accnt006"

"accnt007" "accnt008" "accnt009" "cyclops" "Faber"

"Farbe" "Fortis" "glad5" "glad55" "gladiator"

"gladiator1" "gladiator2" "gladiator3" "jackie" "Kaggleacctk"

"KaggleK2" "sashik"

The next two plots show the scores of the first submission of teams.

What's going on here?

The common scores where the steps are seen are the all zeros benchmark, optimised constant benchmark and the code we posted in our writeup - so this is explained. There is another common first score which is another very simple model that many teams independently thought of.

What does raise an eyebrow from the cumulative plot is that one team stands out as having a very impressive first score. This is team YARONG who posted a very impressive model of 0.457698 on the first attempt and it still remains their best score 22 attempts later. This is possible (you don't need to submit models to blend them if you have your own holdout set - see the IBM writeup in the KDD Cup Orange Challenge) but somewhat unlikely as we know from the writeups that an individual model will get you nowhere near this score.
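For anyone unsure what blending on your own holdout set looks like, here is a minimal sketch with simulated predictions from two hypothetical models. Fitting the blend weights by linear regression is just one simple choice, not a description of what any particular team did.

```r
set.seed(7)
n <- 2000
actual <- rnorm(n)                      # holdout target (simulated)
pred_a <- actual + rnorm(n, sd = 0.8)   # holdout predictions from hypothetical model A
pred_b <- actual + rnorm(n, sd = 0.8)   # holdout predictions from hypothetical model B

rmse <- function(p, y) sqrt(mean((p - y)^2))

# Fit the blend weights on the holdout set by linear regression
blend <- lm(actual ~ pred_a + pred_b)
pred_blend <- fitted(blend)

c(a = rmse(pred_a, actual), b = rmse(pred_b, actual), blend = rmse(pred_blend, actual))
coef(blend)
```

The blend is scored here on the same rows used to fit its weights, so the figure is a little optimistic. With only a couple of weights and a large holdout set the optimism is small, but it is worth keeping a second holdout if you blend many models.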

If you look at the dates teams submit and look at some sort of correlation of entry dates, one team appears twice towards the top - SD_John, and they are also at the top of the leaderboard.

    td.row             td.col       pairs  correl
    UCI-CS273A-RegAll  Alex_Tot     27     0.9979902
    rutgers            HappyAcura   29     0.9978254
    SD_John            lily         34     0.9974190
    Roger99            Krakozjabra  21     0.9956643
    SD_John            JYL          24     0.9950884
    The_Cuckoo's_Nest  NumberNinja  23     0.9931073
    NumberNinja        Chris_R      29     0.9924864
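One way to get a 'correlation of entry dates' like the table above is to correlate each team's running total of submissions over time. The sketch below does that on a made-up submission log. It is an assumed approach for illustration, not necessarily the calculation behind the table.

```r
# Made-up submission log: team name and submission date
subs <- data.frame(
  team = c("X", "Y", "X", "Y", "Z", "X", "Y", "Z", "Z", "X", "Y"),
  date = as.Date("2011-11-01") + c(0, 0, 3, 3, 4, 7, 7, 8, 9, 12, 12)
)

# Submissions per team per day, then running totals for each team
daily <- table(subs$date, subs$team)
cumulative <- apply(daily, 2, cumsum)

# Pairwise correlation of the running totals
correl <- cor(cumulative)
round(correl, 3)
```

Teams X and Y submit on exactly the same days here, so their running totals are identical and the correlation is 1. Running totals always increase, so unrelated teams can also score high on this measure, which is why only the very top of the list is interesting.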

What's going on here?

If you plot the submissions and scores you will see SD_John and Lily seem to perfectly track each other in both the days they submit, the times they submit and the scores they get.

And on one particular day they get exactly the same score within 5 minutes of each other...

SD_John and JYL seem to also track each other in submission dates. Interestingly JYL has a very similar profile to a member of Opera, and a little digging would suggest this is one and the same person.

So here we can hypothesize that SD_John, Lily, JYL and Opera (and evidence also suggests many more teams) are collaborating in some way.

Interesting - all from following your nose, which is what good data mining is all about.

In conclusion, the top of the leaderboard is not really what it appears to be - which I hope will encourage others to keep trying.

The main reason for this investigation was to help me discover what R can do to manipulate data - and the answer is basically anything you want it to do. You first have to know what you want to achieve then do some Googling and you will find some code to help you somewhere.

Thursday, 17 November 2011

The Pack is Catching Up

If you have been keeping an eye on the leaderboard, then you will notice there has been apparently little activity since the milestone 1 deadline. On some occasions there has been no change in the top 40 positions for over one week. This is quite an eerie silence and I suspect there may be a lack of submissions so teams can merge (the total submissions of teams merging has to be less than the number of days the comp has been running).

There have also been some very interesting movements if you look closer - more to come on this in a later post.

If you look further down though, the pack is catching up. In the past six weeks more teams are heading towards the 0.461 mark, which is the point the early leaders got to straight away and was the score to beat. Now it is only a top 50 place.

This score is a good single model. To improve dramatically from there though it is probably necessary to ensemble various models. What is pretty clear though is that the benchmark of 0.40 for the 3 million is impossible (hopefully this might be adjusted?).

I tried to put some nice colours in the chart below, which is generated in R, but could not find any up-to-date listing of colour codes in R. This is one of the disadvantages of the open source movement - documentation is very low on the contributors' list of priorities (and what documentation there is leaves a lot to be desired if R is to be used by 'regular' types of people).

I did find the following link though, which is where I got the colours for the plot,

http://colorbrewer2.org/

click on the image to enlarge

Friday, 7 October 2011

If you can't measure it, you can't manage it!

"If you can't measure it, you can't manage it!" - this is often quoted in the marketing and analytics world.

Apparently

"It comes from a remark attributed to GALILEO, who said 'Count what is countable, measure what is measurable. What is not measurable, make measurable'."

see here for more details on the origins of the quote.

Anyway, the point is that if you don't measure the before and after, you have no idea if the actions made a difference.

Last week in my previous blog post, I made available some code that would result in a score of around 0.4635 on the HHP leaderboard. The hope was that others would be able to get this code to work and re-ignite interest in the HHP and solving the world's health problems.

When I looked at the leaderboard today, it looked on the face of it that this was happening, with a few high risers around the 0.4635 mark.

This was the after, but the before is not shown, so on this evidence there is a bit of a hole in the claim that the code was responsible for this change - we are only showing the part of the leaderboard that we want to - such movement could be going on all over the place.

Good old Jeff Moser at Kaggle has been hard at work making the leaderboard dynamic, so we can actually go back in time. Knowing this I modified a previous R script I posted to plot the leaderboard at 3 points in time, the day I released the code, and a week before and after (the new script can be found at the end of this post).

This resulted in the following 2 plots...

These are now a lot more convincing that the code did make a difference. If you follow Eu Jin Lok on the first plot, you will see he has improved his score but actually gone backwards in rank over the two week period. The second plot shows that the number of submissions to score 0.4635 has dropped.

Anyway, the point of this post is that data scientists have the power to make massive differences to the bottom lines of companies. I have been there and done it, but failed to get too excited as I am unassuming and knew exactly what the result would be because my models told me in advance. If analytics - and us analysts - are to be taken more seriously and given the recognition we deserve, we need to start banging our own drum more than we do. Those who get the recognition and rewards are not always those who do the best work, but those who shout the loudest!

And here is the R script to generate those plots...

##########################################
# Some R function to plot the Kaggle
# leaderboard at different points in time
##########################################

plotKaggleLeaderboard <- function(theURL
                                 ,theDates
                                 ,myTeam
                                 ,topX=100
                                 ,title
                                 ,plottype=1){

  #this library does all the hard work
  #for windows users to install this package see
  #http://cran.r-project.org/bin/windows/contrib/r-release/ReadMe
  #and http://www.stats.ox.ac.uk/pub/RWin/bin/windows/contrib/2.13/
  library(XML)

  theColours <- c(552,254,26)

  #loop over the dates passed in as an argument
  #(the original looped over the global compDates by mistake)
  for (i in 1:length(theDates)) {

    #go and read the tables from the web page
    thisDate <- paste(theURL,"?asOf=",theDates[i],sep="")
    tables <- readHTMLTable(thisDate)

    #get the table of interest
    #names(tables)
    lb <- tables[['leaderboard-table']]

    #see what the columns are
    #colnames(lb)
    #nrow(lb)

    #convert to numeric - see ?readHTMLTable
    #numeric_cols <- c('AUC','Entries')
    #numeric_cols <- c('RMSLE','Entries')
    numeric_cols <- c(4,5)
    lb[numeric_cols] = lapply(lb[numeric_cols], function(x) as.numeric(gsub(".* ", "", as.character(x))))

    #extract the team name from a messy field
    team_col <- c('Team Name')
    #lb[team_col]

    #split the field by "\r\n" that denotes the break between fields within a field
    lb[,team_col] <- sapply(strsplit(as.character(lb[,team_col]), "[\r\n]"), function (x) x[1])

    #####################
    # plot
    #####################
    myRank <- which(lb[team_col] == myTeam)
    myText = paste("following team",myTeam)

    error_data <- lb[,4]
    entries_data <- lb[,5]

    if(plottype==1){
      if(i==1) plot(error_data[1:topX],col = theColours[i],type="l",xlab='Rank',ylab='Error',main = title, sub=myText)
      if(i>1) points(error_data[1:topX],col = theColours[i],type="l")
      #mark position
      points(myRank,error_data[myRank],col=theColours[i],pch=19)
    }

    if(plottype==2){
      if(i==1){
        plot(error_data[1:topX],entries_data[1:topX],type = 'p'
            ,main = title
            ,xlab = 'Error'
            ,ylab = 'Entries'
            ,col = theColours[i]
        )}
      if(i>1) points(error_data[1:topX],entries_data[1:topX],type = 'p', col= theColours[i])
      lines(lowess(error_data[1:topX],entries_data[1:topX]), col=theColours[i], lwd=2.5) # lowess line (x,y)
      #mark my position
      points(error_data[myRank],entries_data[myRank],col=theColours[i],pch=19)
    }

  }

  legend("topleft",legend=theDates,text.col=theColours)

}
###########################
# End of Function
# plotKaggleLeaderboard
###########################

compURL <- "http://www.heritagehealthprize.com/c/hhp/Leaderboard"
compDates <- c('2011-09-24','2011-10-01','2011-10-07')
compTeam <- 'Eu Jin Lok'
compTopX <- 150
compTitle <- 'HHP Leaderboard Through Time'

plotKaggleLeaderboard(theURL = compURL
                     ,theDates = compDates
                     ,myTeam = compTeam
                     ,topX = compTopX
                     ,title = compTitle
                     ,plottype=1
                     )
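The two clean-up steps in the function (stripping everything before the last space in the score column, and keeping only the first line of the team name field) are easier to follow on a small example. The sketch below uses made-up cell values, so the strings are assumptions and not what the leaderboard page actually returns.

```r
# Hypothetical raw cell values, invented for illustration only
raw_score <- c("new 0.461197", "0.462883")
raw_team  <- c("Market Makers\r\n   extra text from the page", "Willem Mestrom")

# ".* " is greedy, so everything up to and including the LAST space is removed,
# leaving just the trailing number, which is then converted to numeric
score <- as.numeric(gsub(".* ", "", as.character(raw_score)))

# split on carriage returns / line feeds and keep only the first piece,
# which is assumed to hold the team name
team <- sapply(strsplit(as.character(raw_team), "[\r\n]"), function(x) x[1])

print(score)
print(team)
```

If the page layout changes, these are the two lines most likely to need adjusting.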

Saturday, 1 October 2011

Code for a Respectable HHP Model

Our team Market Makers did well in the HHP first milestone prize, taking out top spot just above Willem Mestrom. Congratulations to all who took part.

For those of you who haven't seen the presentation, there is a recording at http://www.youtube.com/watch?v=xziMY3nDB_E

Reports were written on our methods and are available here...

http://www.heritagehealthprize.com/c/hhp/Leaderboard/milestone1

Other competitors now have 30 days to read the reports and come back with any concerns.

In our report we included some source code, which is a bit of a pain to copy and paste, so I have reproduced it here. There are two bits of code to run, and at the end a file ready for submission should appear.

The first bit is the data preparation which I did in SQL. I used SQL Server, but it should be easy to run it in any SQL database without much messing around. This took about 8 minutes to run, and at the end is a table ready for use by the second part, which is the modelling performed in R, which takes about 15 minutes.

There are two modifications to the code to make - in the SQL you need to set the correct path to the source files - and in R you need to put in the correct server name so the data can be read directly from the database.

So hopefully in two mouse clicks there will be a file sitting there ready for submission.

If you are not sure about using SQL Server, then the earlier blog posts should point you in the right direction...

http://anotherdataminingblog.blogspot.com/2011/05/progress-loading-hhp-data.html

Note that the SQL provided does not generate all the variables we used - others were created, all using SQL, but the code was a little more complicated. The extra variables are described in our write-up. Also, the parameters used in the GBM modelling are not the most efficient. You will need more trees and a lower shrinkage factor to tease out a better model (see the R documentation) - but this one runs in a reasonable time.

I am keen to see if this code is useful to anybody - if you can't get it to work then please post a comment here. If you do use it then I would also like to hear from you!

SQL Code

/**************************************************************

* SQL Code to create an example data set for the HHP

*

* Edit the path in the 'bulk insert' commands to locate

* the source data

* The end result is a table called 'modelling_set' which can

* then be used to build predictive models

*

* created in SQL server express

* http://www.microsoft.com/sqlserver/en/us/editions/express.aspx

*****************************************************************/

/**************************

create a new database

**************************/

CREATE DATABASE HHP_comp

GO

USE HHP_comp

/**************************

load in the raw data

**************************/

--claims

CREATE TABLE Claims

(

MemberID VARCHAR(8) --integers starting with 0, could be text!

, ProviderID VARCHAR(7) --integers starting with 0, could be text!

, Vendor VARCHAR(6) --integers starting with 0, could be text!

, PCP VARCHAR(5) --integers starting with 0, could be text!

, Year VARCHAR(2)

, Specialty VARCHAR(25)

, PlaceSvc VARCHAR(19)

, PayDelay VARCHAR(4)

, LengthOfStay VARCHAR(10)

, DSFS VARCHAR(12)

, PrimaryConditionGroup VARCHAR(8)

, CharlsonIndex VARCHAR(3)

, ProcedureGroup VARCHAR(4)

, SupLOS TINYINT

)

BULK INSERT Claims

FROM 'F:\comps\hhp\raw data\HHP_release2\Claims.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

--members

CREATE TABLE Members

(

MemberID_M VARCHAR(8) --integers starting with 0, could be text!

, AgeAtFirstClaim VARCHAR(5)

, Sex VARCHAR(1)

)

BULK INSERT Members

FROM 'F:\comps\hhp\raw data\HHP_release2\Members.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

-- drug count

CREATE TABLE DrugCount

(

MemberID INT

, Year VARCHAR(2)

, DSFS VARCHAR(12)

, DrugCount VARCHAR(2)

)

BULK INSERT DrugCount

FROM 'F:\comps\hhp\raw data\HHP_release3\DrugCount.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

-- Lab Count

CREATE TABLE LabCount

(

MemberID INT

, Year VARCHAR(2)

, DSFS VARCHAR(12)

, LabCount VARCHAR(3)

)

BULK INSERT LabCount

FROM 'F:\comps\hhp\raw data\HHP_release3\LabCount.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

--DaysInHospital_Y2

CREATE TABLE DaysInHospital_Y2

(

MemberID INT

, ClaimsTruncated TINYINT

, DaysInHospital TINYINT

)

BULK INSERT DaysInHospital_Y2

FROM 'F:\comps\hhp\raw data\HHP_release2\DaysInHospital_Y2.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

-- DaysInHospital_Y3

CREATE TABLE DaysInHospital_Y3

(

MemberID INT

, ClaimsTruncated TINYINT

, DaysInHospital TINYINT

)

BULK INSERT DaysInHospital_Y3

FROM 'F:\comps\hhp\raw data\HHP_release2\DaysInHospital_Y3.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

-- Target

CREATE TABLE Target

(

MemberID INT

, ClaimsTruncated TINYINT

, DaysInHospital TINYINT

)

BULK INSERT Target

FROM 'F:\comps\hhp\raw data\HHP_release2\Target.csv'

WITH

(

MAXERRORS = 0,

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

/*************************

adjust the claims data to

convert text to integers

**************************/

-- PayDelay

ALTER TABLE Claims

ADD PayDelayI integer

GO

UPDATE Claims

SET PayDelayI = CASE WHEN PayDelay = '162+' THEN 162 ELSE CAST(PayDelay AS integer) END

--dsfs

ALTER TABLE Claims

ADD dsfsI integer

GO

UPDATE Claims

SET dsfsI =

CASE

WHEN dsfs = '0- 1 month' THEN 1

WHEN dsfs = '1- 2 months' THEN 2

WHEN dsfs = '2- 3 months' THEN 3

WHEN dsfs = '3- 4 months' THEN 4

WHEN dsfs = '4- 5 months' THEN 5

WHEN dsfs = '5- 6 months' THEN 6

WHEN dsfs = '6- 7 months' THEN 7

WHEN dsfs = '7- 8 months' THEN 8

WHEN dsfs = '8- 9 months' THEN 9

WHEN dsfs = '9-10 months' THEN 10

WHEN dsfs = '10-11 months' THEN 11

WHEN dsfs = '11-12 months' THEN 12

WHEN dsfs IS NULL THEN NULL

END

-- CharlsonIndex

ALTER TABLE Claims

ADD CharlsonIndexI INTEGER

GO

UPDATE Claims

SET CharlsonIndexI =

CASE

WHEN CharlsonIndex = '0' THEN 0

WHEN CharlsonIndex = '1-2' THEN 2

WHEN CharlsonIndex = '3-4' THEN 4

WHEN CharlsonIndex = '5+' THEN 6

END

-- LengthOfStay

ALTER TABLE Claims

ADD LengthOfStayI INTEGER

GO

UPDATE Claims

SET LengthOfStayI =

CASE

WHEN LengthOfStay = '1 day' THEN 1

WHEN LengthOfStay = '2 days' THEN 2

WHEN LengthOfStay = '3 days' THEN 3

WHEN LengthOfStay = '4 days' THEN 4

WHEN LengthOfStay = '5 days' THEN 5

WHEN LengthOfStay = '6 days' THEN 6

WHEN LengthOfStay = '1- 2 weeks' THEN 11

WHEN LengthOfStay = '2- 4 weeks' THEN 21

WHEN LengthOfStay = '4- 8 weeks' THEN 42

WHEN LengthOfStay = '26+ weeks' THEN 180

WHEN LengthOfStay IS NULL THEN null

END

/**************************

create a summary table

at the member/year level

***************************/

SELECT

year

,Memberid

,COUNT(*) AS no_Claims

,COUNT(DISTINCT ProviderID) AS no_Providers

,COUNT(DISTINCT Vendor) AS no_Vendors

,COUNT(DISTINCT PCP) AS no_PCPs

,COUNT(DISTINCT PlaceSvc) AS no_PlaceSvcs

,COUNT(DISTINCT Specialty) AS no_Specialities

,COUNT(DISTINCT PrimaryConditionGroup) AS no_PrimaryConditionGroups

,COUNT(DISTINCT ProcedureGroup) AS no_ProcedureGroups

,MAX(PayDelayI) AS PayDelay_max

,MIN(PayDelayI) AS PayDelay_min

,AVG(PayDelayI) AS PayDelay_ave

,(CASE WHEN COUNT(*) = 1 THEN 0 ELSE STDEV(PayDelayI) END) AS PayDelay_stdev

,MAX(LengthOfStayI) AS LOS_max

,MIN(LengthOfStayI) AS LOS_min

,AVG(LengthOfStayI) AS LOS_ave

,(CASE WHEN COUNT(*) = 1 THEN 0 ELSE STDEV(LengthOfStayI) END) AS LOS_stdev

,SUM(CASE WHEN LENGTHOFSTAY IS NULL AND SUPLOS = 0 THEN 1 ELSE 0 END) AS LOS_TOT_UNKNOWN

,SUM(CASE WHEN LENGTHOFSTAY IS NULL AND SUPLOS = 1 THEN 1 ELSE 0 END) AS LOS_TOT_SUPRESSED

,SUM(CASE WHEN LENGTHOFSTAY IS NOT NULL THEN 1 ELSE 0 END) AS LOS_TOT_KNOWN

,MAX(dsfsI) AS dsfs_max

,MIN(dsfsI) AS dsfs_min

,MAX(dsfsI) - MIN(dsfsI) AS dsfs_range

,AVG(dsfsI) AS dsfs_ave

,(CASE WHEN COUNT(*) = 1 THEN 0 ELSE STDEV(dsfsI) END) AS dsfs_stdev

,MAX(CharlsonIndexI) AS CharlsonIndexI_max

,MIN(CharlsonIndexI) AS CharlsonIndexI_min

,AVG(CharlsonIndexI) AS CharlsonIndexI_ave

,MAX(CharlsonIndexI) - MIN(CharlsonIndexI) AS CharlsonIndexI_range

,(CASE WHEN COUNT(*) = 1 THEN 0 ELSE STDEV(CharlsonIndexI) END) AS CharlsonIndexI_stdev

,SUM(CASE WHEN PrimaryConditionGroup = 'MSC2a3' THEN 1 ELSE 0 END) AS pcg1

,SUM(CASE WHEN PrimaryConditionGroup = 'METAB3' THEN 1 ELSE 0 END) AS pcg2

,SUM(CASE WHEN PrimaryConditionGroup = 'ARTHSPIN' THEN 1 ELSE 0 END) AS pcg3

,SUM(CASE WHEN PrimaryConditionGroup = 'NEUMENT' THEN 1 ELSE 0 END) AS pcg4

,SUM(CASE WHEN PrimaryConditionGroup = 'RESPR4' THEN 1 ELSE 0 END) AS pcg5

,SUM(CASE WHEN PrimaryConditionGroup = 'MISCHRT' THEN 1 ELSE 0 END) AS pcg6

,SUM(CASE WHEN PrimaryConditionGroup = 'SKNAUT' THEN 1 ELSE 0 END) AS pcg7

,SUM(CASE WHEN PrimaryConditionGroup = 'GIBLEED' THEN 1 ELSE 0 END) AS pcg8

,SUM(CASE WHEN PrimaryConditionGroup = 'INFEC4' THEN 1 ELSE 0 END) AS pcg9

,SUM(CASE WHEN PrimaryConditionGroup = 'TRAUMA' THEN 1 ELSE 0 END) AS pcg10

,SUM(CASE WHEN PrimaryConditionGroup = 'HEART2' THEN 1 ELSE 0 END) AS pcg11

,SUM(CASE WHEN PrimaryConditionGroup = 'RENAL3' THEN 1 ELSE 0 END) AS pcg12

,SUM(CASE WHEN PrimaryConditionGroup = 'ROAMI' THEN 1 ELSE 0 END) AS pcg13

,SUM(CASE WHEN PrimaryConditionGroup = 'MISCL5' THEN 1 ELSE 0 END) AS pcg14

,SUM(CASE WHEN PrimaryConditionGroup = 'ODaBNCA' THEN 1 ELSE 0 END) AS pcg15

,SUM(CASE WHEN PrimaryConditionGroup = 'UTI' THEN 1 ELSE 0 END) AS pcg16

,SUM(CASE WHEN PrimaryConditionGroup = 'COPD' THEN 1 ELSE 0 END) AS pcg17

,SUM(CASE WHEN PrimaryConditionGroup = 'GYNEC1' THEN 1 ELSE 0 END) AS pcg18

,SUM(CASE WHEN PrimaryConditionGroup = 'CANCRB' THEN 1 ELSE 0 END) AS pcg19

,SUM(CASE WHEN PrimaryConditionGroup = 'FXDISLC' THEN 1 ELSE 0 END) AS pcg20

,SUM(CASE WHEN PrimaryConditionGroup = 'AMI' THEN 1 ELSE 0 END) AS pcg21

,SUM(CASE WHEN PrimaryConditionGroup = 'PRGNCY' THEN 1 ELSE 0 END) AS pcg22

,SUM(CASE WHEN PrimaryConditionGroup = 'HEMTOL' THEN 1 ELSE 0 END) AS pcg23

,SUM(CASE WHEN PrimaryConditionGroup = 'HEART4' THEN 1 ELSE 0 END) AS pcg24

,SUM(CASE WHEN PrimaryConditionGroup = 'SEIZURE' THEN 1 ELSE 0 END) AS pcg25

,SUM(CASE WHEN PrimaryConditionGroup = 'APPCHOL' THEN 1 ELSE 0 END) AS pcg26

,SUM(CASE WHEN PrimaryConditionGroup = 'CHF' THEN 1 ELSE 0 END) AS pcg27

,SUM(CASE WHEN PrimaryConditionGroup = 'GYNECA' THEN 1 ELSE 0 END) AS pcg28

,SUM(CASE WHEN PrimaryConditionGroup IS NULL THEN 1 ELSE 0 END) AS pcg29

,SUM(CASE WHEN PrimaryConditionGroup = 'PNEUM' THEN 1 ELSE 0 END) AS pcg30

,SUM(CASE WHEN PrimaryConditionGroup = 'RENAL2' THEN 1 ELSE 0 END) AS pcg31

,SUM(CASE WHEN PrimaryConditionGroup = 'GIOBSENT' THEN 1 ELSE 0 END) AS pcg32

,SUM(CASE WHEN PrimaryConditionGroup = 'STROKE' THEN 1 ELSE 0 END) AS pcg33

,SUM(CASE WHEN PrimaryConditionGroup = 'CANCRA' THEN 1 ELSE 0 END) AS pcg34

,SUM(CASE WHEN PrimaryConditionGroup = 'FLaELEC' THEN 1 ELSE 0 END) AS pcg35

,SUM(CASE WHEN PrimaryConditionGroup = 'MISCL1' THEN 1 ELSE 0 END) AS pcg36

,SUM(CASE WHEN PrimaryConditionGroup = 'HIPFX' THEN 1 ELSE 0 END) AS pcg37

,SUM(CASE WHEN PrimaryConditionGroup = 'METAB1' THEN 1 ELSE 0 END) AS pcg38

,SUM(CASE WHEN PrimaryConditionGroup = 'PERVALV' THEN 1 ELSE 0 END) AS pcg39

,SUM(CASE WHEN PrimaryConditionGroup = 'LIVERDZ' THEN 1 ELSE 0 END) AS pcg40

,SUM(CASE WHEN PrimaryConditionGroup = 'CATAST' THEN 1 ELSE 0 END) AS pcg41

,SUM(CASE WHEN PrimaryConditionGroup = 'CANCRM' THEN 1 ELSE 0 END) AS pcg42

,SUM(CASE WHEN PrimaryConditionGroup = 'PERINTL' THEN 1 ELSE 0 END) AS pcg43

,SUM(CASE WHEN PrimaryConditionGroup = 'PNCRDZ' THEN 1 ELSE 0 END) AS pcg44

,SUM(CASE WHEN PrimaryConditionGroup = 'RENAL1' THEN 1 ELSE 0 END) AS pcg45

,SUM(CASE WHEN PrimaryConditionGroup = 'SEPSIS' THEN 1 ELSE 0 END) AS pcg46

,SUM(CASE WHEN Specialty = 'Internal' THEN 1 ELSE 0 END) AS sp1

,SUM(CASE WHEN Specialty = 'Laboratory' THEN 1 ELSE 0 END) AS sp2

,SUM(CASE WHEN Specialty = 'General Practice' THEN 1 ELSE 0 END) AS sp3

,SUM(CASE WHEN Specialty = 'Surgery' THEN 1 ELSE 0 END) AS sp4

,SUM(CASE WHEN Specialty = 'Diagnostic Imaging' THEN 1 ELSE 0 END) AS sp5

,SUM(CASE WHEN Specialty = 'Emergency' THEN 1 ELSE 0 END) AS sp6

,SUM(CASE WHEN Specialty = 'Other' THEN 1 ELSE 0 END) AS sp7

,SUM(CASE WHEN Specialty = 'Pediatrics' THEN 1 ELSE 0 END) AS sp8

,SUM(CASE WHEN Specialty = 'Rehabilitation' THEN 1 ELSE 0 END) AS sp9

,SUM(CASE WHEN Specialty = 'Obstetrics and Gynecology' THEN 1 ELSE 0 END) AS sp10

,SUM(CASE WHEN Specialty = 'Anesthesiology' THEN 1 ELSE 0 END) AS sp11

,SUM(CASE WHEN Specialty = 'Pathology' THEN 1 ELSE 0 END) AS sp12

,SUM(CASE WHEN Specialty IS NULL THEN 1 ELSE 0 END) AS sp13

,SUM(CASE WHEN ProcedureGroup = 'EM' THEN 1 ELSE 0 END ) AS pg1

,SUM(CASE WHEN ProcedureGroup = 'PL' THEN 1 ELSE 0 END ) AS pg2

,SUM(CASE WHEN ProcedureGroup = 'MED' THEN 1 ELSE 0 END ) AS pg3

,SUM(CASE WHEN ProcedureGroup = 'SCS' THEN 1 ELSE 0 END ) AS pg4

,SUM(CASE WHEN ProcedureGroup = 'RAD' THEN 1 ELSE 0 END ) AS pg5

,SUM(CASE WHEN ProcedureGroup = 'SDS' THEN 1 ELSE 0 END ) AS pg6

,SUM(CASE WHEN ProcedureGroup = 'SIS' THEN 1 ELSE 0 END ) AS pg7

,SUM(CASE WHEN ProcedureGroup = 'SMS' THEN 1 ELSE 0 END ) AS pg8

,SUM(CASE WHEN ProcedureGroup = 'ANES' THEN 1 ELSE 0 END ) AS pg9

,SUM(CASE WHEN ProcedureGroup = 'SGS' THEN 1 ELSE 0 END ) AS pg10

,SUM(CASE WHEN ProcedureGroup = 'SEOA' THEN 1 ELSE 0 END ) AS pg11

,SUM(CASE WHEN ProcedureGroup = 'SRS' THEN 1 ELSE 0 END ) AS pg12

,SUM(CASE WHEN ProcedureGroup = 'SNS' THEN 1 ELSE 0 END ) AS pg13

,SUM(CASE WHEN ProcedureGroup = 'SAS' THEN 1 ELSE 0 END ) AS pg14

,SUM(CASE WHEN ProcedureGroup = 'SUS' THEN 1 ELSE 0 END ) AS pg15

,SUM(CASE WHEN ProcedureGroup IS NULL THEN 1 ELSE 0 END ) AS pg16

,SUM(CASE WHEN ProcedureGroup = 'SMCD' THEN 1 ELSE 0 END ) AS pg17

,SUM(CASE WHEN ProcedureGroup = 'SO' THEN 1 ELSE 0 END ) AS pg18

,SUM(CASE WHEN PlaceSvc = 'Office' THEN 1 ELSE 0 END) AS ps1

,SUM(CASE WHEN PlaceSvc = 'Independent Lab' THEN 1 ELSE 0 END) AS ps2

,SUM(CASE WHEN PlaceSvc = 'Urgent Care' THEN 1 ELSE 0 END) AS ps3

,SUM(CASE WHEN PlaceSvc = 'Outpatient Hospital' THEN 1 ELSE 0 END) AS ps4

,SUM(CASE WHEN PlaceSvc = 'Inpatient Hospital' THEN 1 ELSE 0 END) AS ps5

,SUM(CASE WHEN PlaceSvc = 'Ambulance' THEN 1 ELSE 0 END) AS ps6

,SUM(CASE WHEN PlaceSvc = 'Other' THEN 1 ELSE 0 END) AS ps7

,SUM(CASE WHEN PlaceSvc = 'Home' THEN 1 ELSE 0 END) AS ps8

,SUM(CASE WHEN PlaceSvc IS NULL THEN 1 ELSE 0 END) AS ps9

INTO claims_per_member

FROM Claims

GROUP BY year,Memberid

-- remove some nulls

UPDATE claims_per_member

SET LOS_max = 0 WHERE LOS_max IS NULL

UPDATE claims_per_member

SET LOS_min = 0 WHERE LOS_min IS NULL

UPDATE claims_per_member

SET LOS_ave = 0 WHERE LOS_ave IS NULL

UPDATE claims_per_member

SET LOS_stdev = -1 WHERE LOS_stdev IS NULL

UPDATE claims_per_member

SET dsfs_max = 0 WHERE dsfs_max IS NULL

UPDATE claims_per_member

SET dsfs_min = 0 WHERE dsfs_min IS NULL

UPDATE claims_per_member

SET dsfs_ave = 0 WHERE dsfs_ave IS NULL

UPDATE claims_per_member

SET dsfs_stdev = -1 WHERE dsfs_stdev IS NULL

UPDATE claims_per_member

SET dsfs_range = -1 WHERE dsfs_range IS NULL

UPDATE claims_per_member

SET CharlsonIndexI_range = -1 WHERE CharlsonIndexI_range IS NULL

/***********************************

Members

***********************************/

-- create binary flags for age

ALTER TABLE Members ADD age_05 INT

ALTER TABLE Members ADD age_15 INT

ALTER TABLE Members ADD age_25 INT

ALTER TABLE Members ADD age_35 INT

ALTER TABLE Members ADD age_45 INT

ALTER TABLE Members ADD age_55 INT

ALTER TABLE Members ADD age_65 INT

ALTER TABLE Members ADD age_75 INT

ALTER TABLE Members ADD age_85 INT

ALTER TABLE Members ADD age_MISS INT

GO

UPDATE Members SET age_05 = CASE WHEN ageATfirstclaim = '0-9' THEN 1 ELSE 0 END

UPDATE Members SET age_15 = CASE WHEN ageATfirstclaim = '10-19' THEN 1 ELSE 0 END

UPDATE Members SET age_25 = CASE WHEN ageATfirstclaim = '20-29' THEN 1 ELSE 0 END

UPDATE Members SET age_35 = CASE WHEN ageATfirstclaim = '30-39' THEN 1 ELSE 0 END

UPDATE Members SET age_45 = CASE WHEN ageATfirstclaim = '40-49' THEN 1 ELSE 0 END

UPDATE Members SET age_55 = CASE WHEN ageATfirstclaim = '50-59' THEN 1 ELSE 0 END

UPDATE Members SET age_65 = CASE WHEN ageATfirstclaim = '60-69' THEN 1 ELSE 0 END

UPDATE Members SET age_75 = CASE WHEN ageATfirstclaim = '70-79' THEN 1 ELSE 0 END

UPDATE Members SET age_85 = CASE WHEN ageATfirstclaim = '80+' THEN 1 ELSE 0 END

UPDATE Members SET age_MISS = CASE WHEN ageATfirstclaim IS NULL THEN 1 ELSE 0 END

--create binary flags for sex

ALTER TABLE Members

ADD sexMALE INT

GO

UPDATE Members

SET SexMALE =

CASE

WHEN Sex = 'M' THEN 1 ELSE 0

END

ALTER TABLE Members

ADD sexFEMALE INT

GO

UPDATE Members

SET SexFEMALE =

CASE

WHEN Sex = 'F' THEN 1 ELSE 0

END

ALTER TABLE Members

ADD sexMISS INT

GO

UPDATE Members

SET SexMISS =

CASE

WHEN Sex IS NULL THEN 1 ELSE 0

END

/******************

DRUG COUNTS

******************/

-- convert to integers

ALTER TABLE drugcount ADD DrugCountI INT

GO

UPDATE DRUGCOUNT

SET DrugCountI =

CASE WHEN DrugCount = '7+' THEN 7 ELSE DrugCount END

SELECT

memberID AS memberID_dc

,Year AS YEAR_dc

,MAX(drugcountI) AS drugCount_max

,MIN(drugcountI) AS drugCount_min

,AVG(drugcountI * 1.0) AS drugCount_ave

,COUNT(*) AS drugcount_months

INTO DRUGCOUNT_SUMMARY

FROM

drugcount

GROUP BY

memberID

,Year

/******************

LAB COUNTS

******************/

-- convert to integers

ALTER TABLE LabCount ADD LabCountI INT

GO

UPDATE LabCount

SET LabCountI =

CASE WHEN LabCount = '10+' THEN 10 ELSE LabCount END

SELECT

memberID AS memberID_lc

,Year AS YEAR_lc

,MAX(labcountI) AS labCount_max

,MIN(labcountI) AS labCount_min

,AVG(labcountI * 1.0) AS labCount_ave

,COUNT(*) AS labcount_months

INTO LABCOUNT_SUMMARY

FROM

labcount

GROUP BY

memberID

,Year

/********************************

Targets

********************************/

SELECT *

INTO DIH

FROM

(

SELECT

MemberID AS MemberID_t

,'Y1' AS YEAR_t

,ClaimsTruncated

,DaysInHospital

,1 AS trainset

FROM DaysInHospital_Y2

UNION ALL

SELECT

MemberID AS MemberID_t

,'Y2' AS YEAR_t

,ClaimsTruncated

,DaysInHospital

,1 AS trainset

FROM DaysInHospital_Y3

UNION ALL

SELECT

MemberID AS MemberID_t

,'Y3' AS YEAR_t

,ClaimsTruncated

,null AS DaysInHospital

,0 AS trainset

FROM Target

) a

/*****************************

Now merge them all together to

create the modelling data set

******************************/

SELECT a.*,b.*

INTO #temp1

FROM

DIH a

LEFT OUTER JOIN

members b

on a.MemberID_t = B.Memberid_M

ALTER TABLE #temp1 DROP COLUMN Memberid_M

ALTER TABLE #temp1 DROP COLUMN AgeAtFirstClaim

ALTER TABLE #temp1 DROP COLUMN Sex

GO

SELECT a.*,b.*

INTO #temp2

FROM

#temp1 a

LEFT OUTER JOIN

claims_per_member b

on a.MemberID_t = B.Memberid

AND a.YEAR_t = b.year

ALTER TABLE #temp2 DROP COLUMN Memberid

ALTER TABLE #temp2 DROP COLUMN year

GO

SELECT a.*,b.*

INTO #temp3

FROM

#temp2 a

LEFT OUTER JOIN

DRUGCOUNT_SUMMARY b

on a.MemberID_t = B.Memberid_dc

AND a.YEAR_t = b.YEAR_dc

ALTER TABLE #temp3 DROP COLUMN Memberid_dc

ALTER TABLE #temp3 DROP COLUMN YEAR_dc

GO

SELECT a.*,b.*

INTO #temp4

FROM

#temp3 a

LEFT OUTER JOIN

LABCOUNT_SUMMARY b

on a.MemberID_t = B.Memberid_lc

AND a.YEAR_t = b.YEAR_lc

ALTER TABLE #temp4 DROP COLUMN Memberid_lc

ALTER TABLE #temp4 DROP COLUMN YEAR_lc

GO

-- remove nulls for those who had

-- no lab or drug information

ALTER TABLE #temp4 ADD labNull INT

ALTER TABLE #temp4 ADD drugNull INT

GO

UPDATE #temp4 SET labNull = 0

UPDATE #temp4 SET labNull = 1 WHERE labCount_max IS NULL

UPDATE #temp4 SET drugNull = 0

UPDATE #temp4 SET drugNull = 1 WHERE drugCount_max IS NULL

UPDATE #temp4 SET labCount_max = 0 WHERE labCount_max IS NULL

UPDATE #temp4 SET labCount_min = 0 WHERE labCount_min IS NULL

UPDATE #temp4 SET labCount_ave = 0 WHERE labCount_ave IS NULL

UPDATE #temp4 SET labcount_months = 0 WHERE labcount_months IS NULL

UPDATE #temp4 SET drugCount_max = 0 WHERE drugCount_max IS NULL

UPDATE #temp4 SET drugCount_min = 0 WHERE drugCount_min IS NULL

UPDATE #temp4 SET drugCount_ave = 0 WHERE drugCount_ave IS NULL

UPDATE #temp4 SET drugcount_months = 0 WHERE drugcount_months IS NULL

SELECT *

INTO modelling_set

FROM #temp4

R code

########################################
# Example GBM model for HHP
# scores ~ 0.4635 on leaderboard
# which would be 55th position of 510
# as at 9th Sept 2011
#
# Requires the data having been prepared
# using the SQL supplied
#
########################################

starttime <- proc.time()

########################################
#load the data
########################################
library(RODBC)

#set a connection to the database
conn <- odbcDriverConnect("driver=SQL Server;database=HHP_comp;server=servername\\SQLEXPRESS;")

#or this method involves setting up a DSN (Data Source Name) called HHP_comp
#conn <- odbcConnect("HHP_comp")

alldata <- sqlQuery(conn,"select * from modelling_set")

########################################
# arrange the data
########################################

#identify train and leaderboard data
trainrows <- which(alldata$trainset == 1)
scorerows <- which(alldata$trainset == 0)

#sanity check the size of each set
length(trainrows)
length(scorerows)

#display the column names
colnames(alldata)

#memberid is required as key for submission set
memberid <- alldata[scorerows,'MemberID_t']

#remove redundant fields
alldata$MemberID_t <- NULL
alldata$YEAR_t <- NULL
alldata$trainset <- NULL

#target - what we are predicting
theTarget <- 'DaysInHospital'

#put the target on the log scale
alldata[trainrows,theTarget] <- log1p(alldata[trainrows,theTarget])

#find the position of the target
targindex <- which(names(alldata)==theTarget)

########################################
# build the model
########################################

#GBM model settings, these can be varied
GBM_NTREES = 500
GBM_SHRINKAGE = 0.05
GBM_DEPTH = 4
GBM_MINOBS = 50

#build the GBM model
library(gbm)
GBM_model <- gbm.fit(
             x = alldata[trainrows,-targindex]
            ,y = alldata[trainrows,targindex]
            ,distribution = "gaussian"
            ,n.trees = GBM_NTREES
            ,shrinkage = GBM_SHRINKAGE
            ,interaction.depth = GBM_DEPTH
            ,n.minobsinnode = GBM_MINOBS
            ,verbose = TRUE)

#list variable importance
summary(GBM_model,GBM_NTREES)

#predict for the leaderboard data
prediction <- predict.gbm(object = GBM_model
              ,newdata = alldata[scorerows,-targindex]
              ,GBM_NTREES)

#put on correct scale and cap
prediction <- expm1(prediction)
prediction <- pmin(15,prediction)
prediction <- pmax(0,prediction)

#plot the submission distribution
hist(prediction, breaks=500)

########################################
#write the submission to file
########################################
submission <- cbind(memberid,prediction)
colnames(submission) <- c("MemberID","DaysInHospital")
fnname <- "C:\\GBM_demo1.csv"
write.csv(submission, file=fnname, row.names = FALSE)

elapsedtime <- proc.time() - starttime
cat("\nFinished\n",elapsedtime)
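The script above trains on log1p of the target and converts back with expm1 before capping. A minimal sketch of why, assuming the leaderboard error is the root mean squared logarithmic error (RMSLE): squared error on the log1p scale is the same quantity the score measures. The function name rmsle and the sample numbers are my own illustrations and are not part of the original code.

```r
# RMSLE: root mean squared error between log1p(predicted) and log1p(actual)
# (assumed here to be the competition metric)
rmsle <- function(predicted, actual) {
  sqrt(mean((log1p(predicted) - log1p(actual))^2))
}

# Hypothetical values, for illustration only
actual    <- c(0, 0, 1, 3, 15)
predicted <- c(0.1, 0.2, 0.8, 2.0, 9.0)

# Score of the made-up predictions
example_score <- rmsle(predicted, actual)
print(example_score)

# The same capping as in the script: predictions limited to the range [0, 15]
capped <- pmax(0, pmin(15, c(-0.3, 4.2, 21)))
print(capped)
```

A function like this can be used to score predictions on a held-out part of the training rows before using up a leaderboard submission.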
